The Mid-Funnel Dead Zone: How to Turn MQLs into Meetings with AI and Humans

Mid-market B2B teams do not need more leads. They need more meetings from the leads they already have. This playbook shows how to close the MQL-to-SQL gap with shared definitions, faster first touch, signal-based prioritization, and AI drafts with human review.

Summary

Most pipelines do not break at the top. They decay between MQL and SQL because of slow response, fuzzy handoffs, and generic nurture. The fix is simple to describe but hard to run: shared definitions and an SLA, sub-five-minute response, unified signals across web and CRM, and AI-drafted messages that humans approve. Use this guide to set benchmarks, run a 30-day rollout, and measure lift in qualified replies, meetings, and SQL rate.

Playbook in five moves

Agree on MQL, SAL, and SQL definitions and an SLA

Reach a sub-five-minute response time [1]

Unify signals across web, intent, and CRM

Let AI suggest, humans approve

Track qualified replies and meetings, not opens

What is the mid-funnel dead zone and how big is it?

The mid-funnel dead zone is the period after marketing qualifies a lead and before sales accepts it. Leads stall there because the first touch is slow, handoffs are unclear, or messaging feels generic. At the same time, buyers self-serve for longer and tune out irrelevant outreach, and many now prefer a rep-free buying experience. [2]

Where decay happens

No written MQL-to-SQL SLA

First response slower than five minutes

Nurture that ignores behavior and intent signals

Data silos that hide buyer and account activity

MQL-to-SQL benchmarks by industry

| Industry | MQL-to-SQL benchmark | Source | Notes |
|---|---|---|---|
| B2B SaaS | 13% | FirstPageSage 2025 [6] | Client data 2019–2024 |
| Fintech | 11% | FirstPageSage 2025 [6] | Longer consensus cycles |
| Manufacturing | 16% | FirstPageSage 2025 [6] | Budget and scoping friction |
| Healthcare | 13% | FirstPageSage 2025 [6] | Compliance-heavy |

Benchmarks summarized from FirstPageSage's 2025 report [6]. Use your own baseline, broken out by source, to set targets.
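To see where you stand, compute your own MQL-to-SQL rate per source and the percentage-point gap to your industry's figure. This Python sketch assumes each lead record is a dict with hypothetical `source` and `is_sql` keys and that every record has already reached MQL status; the benchmark values are copied from the table above.

```python
from collections import Counter

# Industry benchmarks from the table above (FirstPageSage 2025 report), as fractions.
BENCHMARKS = {
    "B2B SaaS": 0.13,
    "Fintech": 0.11,
    "Manufacturing": 0.16,
    "Healthcare": 0.13,
}


def mql_to_sql_by_source(leads):
    """Return {source: SQLs / MQLs} for leads that all reached MQL status.

    Each lead is a dict with hypothetical keys 'source' (str) and 'is_sql' (bool).
    """
    mqls = Counter(lead["source"] for lead in leads)
    sqls = Counter(lead["source"] for lead in leads if lead["is_sql"])
    return {source: sqls[source] / count for source, count in mqls.items()}


def gap_to_benchmark(rates, industry):
    """Percentage-point gap between each source's rate and the industry benchmark."""
    benchmark = BENCHMARKS[industry]
    return {source: round((rate - benchmark) * 100, 1) for source, rate in rates.items()}


# Illustrative data only: 10 webinar MQLs and 10 paid-search MQLs.
leads = (
    [{"source": "webinar", "is_sql": True}]
    + [{"source": "webinar", "is_sql": False}] * 9
    + [{"source": "paid_search", "is_sql": True}] * 2
    + [{"source": "paid_search", "is_sql": False}] * 8
)
rates = mql_to_sql_by_source(leads)
print(rates)                                # {'webinar': 0.1, 'paid_search': 0.2}
print(gap_to_benchmark(rates, "B2B SaaS"))  # {'webinar': -3.0, 'paid_search': 7.0}
```

Reporting the gap in percentage points per source keeps one strong channel from hiding a weak one in the blended rate.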

Why do MQL-to-SQL handoffs fail?

No shared definitions

Marketing calls the lead qualified. Sales calls it premature. Without a single written SLA, the pipeline becomes political. Create a one-page SLA that spells out MQL, SAL, and SQL definitions, required fields, and response time. Review it every week.
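One way to keep the SLA from drifting is to store it as data that routing and QA checks can read. This is a minimal Python sketch; the `HandoffSLA` class, the field names, and the definition strings are placeholders to replace with the wording your teams actually sign.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HandoffSLA:
    """A one-page MQL/SAL/SQL agreement stored as data (all values are placeholders)."""
    mql_definition: str
    sal_definition: str
    sql_definition: str
    required_fields: tuple
    max_first_touch_minutes: int = 5


def missing_fields(sla, lead):
    """Return the SLA-required fields that are absent or empty on a lead record."""
    return [name for name in sla.required_fields if not lead.get(name)]


# Placeholder definitions: replace with the wording both teams sign off on.
SLA = HandoffSLA(
    mql_definition="<agreed MQL criteria>",
    sal_definition="<what sales must confirm to accept>",
    sql_definition="<agreed SQL criteria>",
    required_fields=("email", "company", "job_title", "lead_source"),
)

lead = {"email": "jo@example.com", "company": "Acme", "job_title": "", "lead_source": "webinar"}
print(missing_fields(SLA, lead))  # ['job_title']
```

A lead that fails `missing_fields` can be bounced back before handoff instead of becoming an argument in the weekly review.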

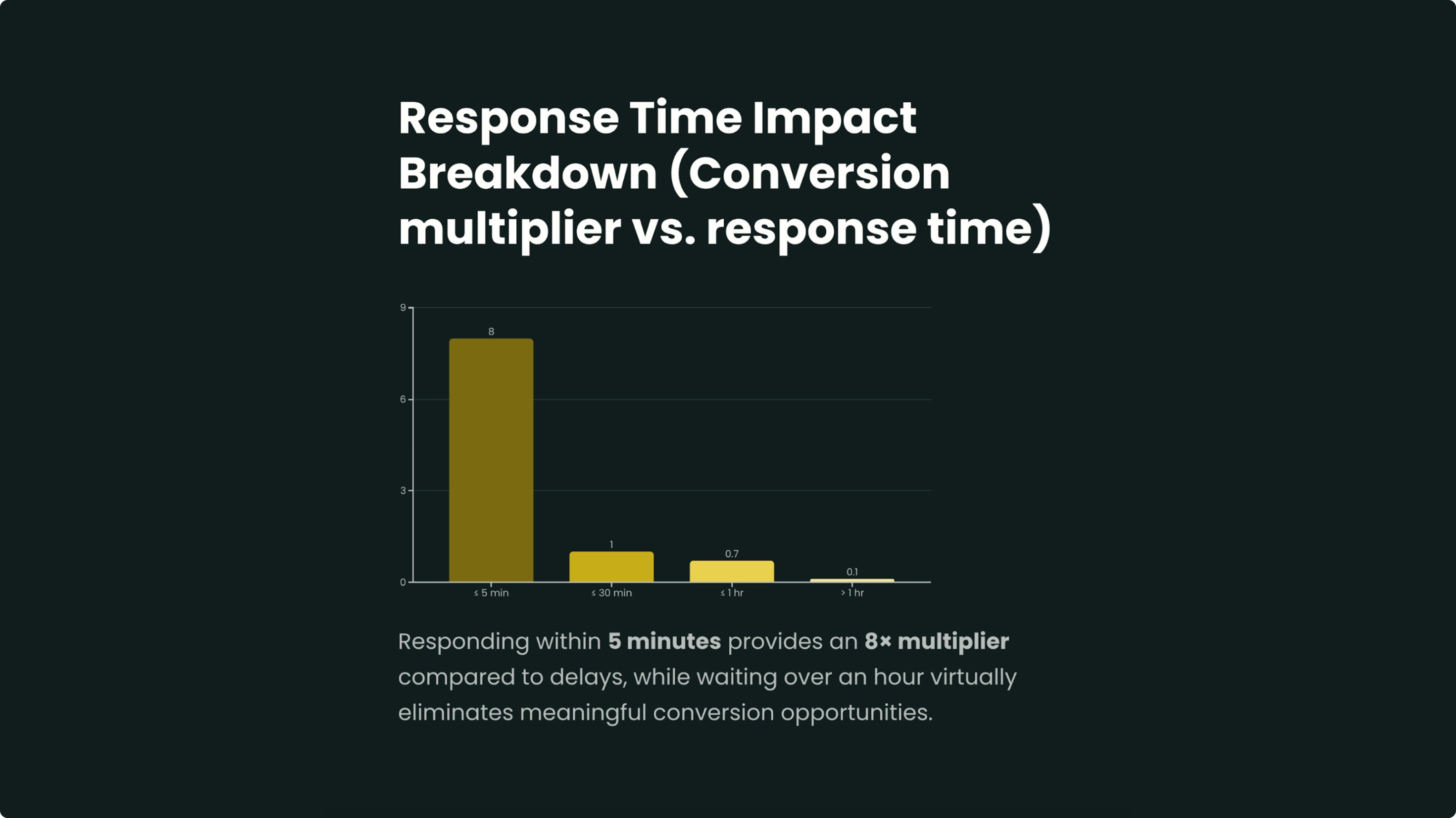

Slow first touch

Leads contacted within five minutes qualify far more often than leads worked later. Classic research shows the odds of contacting and qualifying a lead drop sharply once response time passes five minutes, and drop further after thirty. [1] Treat a sub-five-minute response as a non-negotiable standard, not an aspiration.
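Before setting a target, measure where you are. This hypothetical Python helper buckets leads by minutes from MQL creation to first touch, using the five- and thirty-minute thresholds from the research above, and reports the share answered within five minutes. It assumes each lead has `created_at` and `first_touch_at` timestamps, with `first_touch_at` set to `None` when no one has reached out.

```python
from datetime import datetime
from typing import Optional


def response_bucket(created_at: datetime, first_touch_at: Optional[datetime]) -> str:
    """Classify a lead by minutes from MQL creation to first touch."""
    if first_touch_at is None:
        return "not contacted"
    minutes = (first_touch_at - created_at).total_seconds() / 60
    if minutes <= 5:
        return "within 5 min"
    if minutes <= 30:
        return "5 to 30 min"
    return "over 30 min"


def share_within_five_minutes(leads) -> float:
    """Fraction of all leads (contacted or not) touched within five minutes."""
    if not leads:
        return 0.0
    hits = sum(
        response_bucket(lead["created_at"], lead["first_touch_at"]) == "within 5 min"
        for lead in leads
    )
    return hits / len(leads)


t0 = datetime(2025, 3, 3, 9, 0)
leads = [
    {"created_at": t0, "first_touch_at": datetime(2025, 3, 3, 9, 3)},
    {"created_at": t0, "first_touch_at": datetime(2025, 3, 3, 9, 12)},
    {"created_at": t0, "first_touch_at": datetime(2025, 3, 3, 11, 0)},
    {"created_at": t0, "first_touch_at": None},
]
print(share_within_five_minutes(leads))  # 0.25
```

Uncontacted leads stay in the denominator on purpose, so ignoring a lead never improves the number.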

Generic nurture and data silos

Disconnected tools hide behavior that should guide timing and content. Buyers want to research on their own and avoid irrelevant outreach. [2] When nurture programs ignore signals, they train buyers to ignore future messages too.

How AI actually helps: no magic, just mechanics

Signal ingestion

Start by unifying open-web, first-party, and CRM data. Normalize job changes, intent surges, content engagement, and past interactions across both accounts and people. Buying groups now often include 7 to 13 stakeholders, so signal sprawl is the norm. [3]
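Normalization mostly means agreeing on one schema and one account key. The sketch below is illustrative only: the `Signal` fields, the signal kinds, and the choice of web domain as the account key are assumptions, not a standard any tool enforces.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Signal:
    """One normalized buyer signal (hypothetical schema)."""
    account_domain: str           # account key shared across tools
    person_email: Optional[str]   # None for account-level signals
    kind: str                     # e.g. "job_change", "intent_surge", "content_engagement"
    source: str                   # e.g. "open_web", "first_party", "crm"
    observed_on: date


def normalize_domain(raw: str) -> str:
    """Lowercase and strip scheme, 'www.', and path so one account matches across tools."""
    domain = raw.strip().lower()
    for prefix in ("https://", "http://"):
        if domain.startswith(prefix):
            domain = domain[len(prefix):]
    if domain.startswith("www."):
        domain = domain[len("www."):]
    return domain.split("/")[0]


def make_signal(raw_domain, email, kind, source, observed_on) -> Signal:
    """Build a Signal with a normalized account key and a lowercased email."""
    return Signal(
        account_domain=normalize_domain(raw_domain),
        person_email=email.strip().lower() if email else None,
        kind=kind,
        source=source,
        observed_on=observed_on,
    )


def timeline_by_account(signals):
    """Group signals per account, newest first, so reps see one timeline per account."""
    grouped = defaultdict(list)
    for signal in signals:
        grouped[signal.account_domain].append(signal)
    for items in grouped.values():
        items.sort(key=lambda s: s.observed_on, reverse=True)
    return dict(grouped)


signals = [
    make_signal("https://www.Acme.com/pricing", "Jo@Acme.com", "content_engagement", "first_party", date(2025, 3, 1)),
    make_signal("acme.com", None, "intent_surge", "open_web", date(2025, 3, 4)),
    make_signal("globex.io", "ana@globex.io", "job_change", "crm", date(2025, 2, 20)),
]
timeline = timeline_by_account(signals)
print([s.kind for s in timeline["acme.com"]])  # ['intent_surge', 'content_engagement']
```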

Predictive prioritization

Personalization at scale with humans in the loop

AI can draft a first touch based on signals and past wins. Humans keep tone, ethics, and context on track. The most durable pattern is simple: machine suggests, human approves. That protects brand voice while shrinking ramp time and response lag.
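The "machine suggests, human approves" pattern can be enforced in code rather than left to habit. In this minimal sketch, a draft can only be sent after a named reviewer approves it. `Draft`, `approve`, and `send` are hypothetical names, and `deliver` stands in for whatever email or sequencing tool you use.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class DraftStatus(Enum):
    SUGGESTED = "suggested"   # produced by the AI, not yet reviewed
    APPROVED = "approved"     # a named human signed off
    SENT = "sent"


@dataclass
class Draft:
    lead_id: str
    body: str
    status: DraftStatus = DraftStatus.SUGGESTED
    reviewer: Optional[str] = None


def approve(draft: Draft, reviewer: str, edited_body: Optional[str] = None) -> Draft:
    """A human approves the AI suggestion, optionally rewriting it first."""
    if draft.status is not DraftStatus.SUGGESTED:
        raise ValueError(f"cannot approve a draft that is {draft.status.value}")
    if edited_body is not None:
        draft.body = edited_body
    draft.status = DraftStatus.APPROVED
    draft.reviewer = reviewer
    return draft


def send(draft: Draft, deliver: Callable[[str, str], None]) -> Draft:
    """Deliver only human-approved drafts; AI output is never sent directly."""
    if draft.status is not DraftStatus.APPROVED or not draft.reviewer:
        raise PermissionError("draft needs human approval before sending")
    deliver(draft.lead_id, draft.body)
    draft.status = DraftStatus.SENT
    return draft


outbox = []
draft = Draft("lead-42", "AI-suggested opener based on recent pricing-page visits")
approve(draft, "sdr@example.com", edited_body="Hi Jo, saw your team comparing plans. Worth a quick call?")
send(draft, lambda lead_id, body: outbox.append((lead_id, body)))
print(draft.status)  # DraftStatus.SENT
```

Recording the reviewer on every sent message also gives you an audit trail for tone and ethics reviews.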

A 30-day rollout real teams can actually run

| Phase | Days | Focus | Key metrics |
|---|---|---|---|
| Baseline | 1–7 | Benchmark MQL-to-SQL rate, response time, and reply quality | Control data set |
| Alignment | 8–14 | Define MQL and SQL with an SLA; map signal sources | SLA signed, sources mapped |
| Build | 15–21 | Create sequences for three personas across email and LinkedIn; add review gates | Sequence QA complete |
| Launch and learn | 22–30 | Run tests and hold weekly readouts on replies and meetings | Lift in reply rate and meetings |
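For the weekly readouts, compare each metric against the control data set gathered in the baseline phase (days 1 to 7). This sketch computes rates per contacted lead and relative lift for a control and a test cohort. The count keys are hypothetical, and it reports raw lift only, with no significance test, so treat small samples with caution.

```python
def rate(count: int, contacted: int) -> float:
    """Events per contacted lead; 0.0 when nobody was contacted."""
    return count / contacted if contacted else 0.0


def relative_lift(control: float, test: float) -> float:
    """(test - control) / control, so 0.5 means +50% over the control."""
    if control == 0:
        raise ValueError("control rate is zero; compare absolute counts instead")
    return (test - control) / control


def weekly_readout(control: dict, test: dict, metrics=("qualified_replies", "meetings")) -> dict:
    """Rates and relative lift per metric for a control and a test cohort."""
    readout = {}
    for metric in metrics:
        c = rate(control[metric], control["contacted"])
        t = rate(test[metric], test["contacted"])
        readout[metric] = {"control": c, "test": t, "lift": relative_lift(c, t)}
    return readout


# Illustrative counts, not real results.
control_cohort = {"contacted": 400, "qualified_replies": 20, "meetings": 8}
test_cohort = {"contacted": 400, "qualified_replies": 30, "meetings": 12}
for metric, row in weekly_readout(control_cohort, test_cohort).items():
    print(f"{metric}: {row['control']:.1%} -> {row['test']:.1%} ({row['lift']:+.0%})")
```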

What a sub-five-minute response really requires

Routing rules with owner backups

Slack or email alerts tied to new MQL creation

CRM tasks auto created with timers and clear owners
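The three requirements above fit together roughly as follows. This Python sketch assumes a hypothetical territory-to-owners map (primary first, then backups), an `is_available` check, and a `notify` callback standing in for your Slack or email alert; it creates a first-touch task due five minutes after MQL creation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, Dict, List, Optional

FIRST_TOUCH_SLA = timedelta(minutes=5)
FALLBACK_QUEUE = "sdr-queue"  # hypothetical shared queue if every named owner is out


@dataclass
class Task:
    lead_id: str
    owner: str
    due_at: datetime


def route(territory: str, rules: Dict[str, List[str]], is_available: Callable[[str], bool]) -> Optional[str]:
    """Return the primary owner if available, otherwise the first available backup."""
    for owner in rules.get(territory, []):
        if is_available(owner):
            return owner
    return None


def on_new_mql(lead: dict, rules, is_available, notify: Callable[[str, str], None]) -> Task:
    """Triggered on MQL creation: assign an owner, set a 5-minute timer, send an alert."""
    owner = route(lead["territory"], rules, is_available) or FALLBACK_QUEUE
    task = Task(lead["id"], owner, lead["mql_created_at"] + FIRST_TOUCH_SLA)
    notify(owner, f"New MQL {lead['id']}: first touch due by {task.due_at:%H:%M}")
    return task


def is_overdue(task: Task, now: datetime, touched: bool) -> bool:
    """True once the timer has expired without a first touch (a cue to escalate)."""
    return not touched and now > task.due_at


rules = {"emea": ["ana", "ben"]}  # primary first, then backups
out_of_office = {"ana"}
alerts = []
lead = {"id": "L-1", "territory": "emea", "mql_created_at": datetime(2025, 3, 3, 9, 0)}
task = on_new_mql(lead, rules, lambda o: o not in out_of_office, lambda o, msg: alerts.append((o, msg)))
print(task.owner, task.due_at)  # ben 2025-03-03 09:05:00
```

The fallback queue is a design choice: an unowned MQL is worse than one sitting in a shared queue that someone watches.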

What to measure to prove lift

Qualified reply rate

Share of replies that match ICP and show clear intent.

Meetings booked

Meetings from nurtured leads per week.

Time to first meeting

Days from MQL to first meeting.

SQL rate by source

SQL conversion by channel to show quality, not just volume.

Guardrail: deprioritize vanity opens and clicks. Focus on actions that link to revenue.
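A minimal sketch of three of these metrics in Python. It assumes reviewers tag each reply with hypothetical `icp_match` and `clear_intent` flags and that leads carry `mql_on` and `first_meeting_on` dates. SQL rate by source is a plain ratio of SQLs to MQLs grouped by channel and is omitted here.

```python
from datetime import date
from statistics import median


def qualified_reply_rate(replies) -> float:
    """Share of replies a reviewer tagged as both an ICP match and showing clear intent."""
    if not replies:
        return 0.0
    qualified = sum(1 for reply in replies if reply["icp_match"] and reply["clear_intent"])
    return qualified / len(replies)


def median_days_to_first_meeting(leads):
    """Median days from MQL to first meeting; leads without a meeting are skipped."""
    gaps = [
        (lead["first_meeting_on"] - lead["mql_on"]).days
        for lead in leads
        if lead.get("first_meeting_on")
    ]
    return median(gaps) if gaps else None


def meetings_per_week(meeting_dates):
    """Meetings from nurtured leads counted per ISO (year, week)."""
    counts = {}
    for day in meeting_dates:
        year, week, _ = day.isocalendar()
        counts[(year, week)] = counts.get((year, week), 0) + 1
    return counts


replies = [
    {"icp_match": True, "clear_intent": True},
    {"icp_match": True, "clear_intent": False},
    {"icp_match": False, "clear_intent": True},
    {"icp_match": True, "clear_intent": True},
]
leads = [
    {"mql_on": date(2025, 3, 3), "first_meeting_on": date(2025, 3, 7)},
    {"mql_on": date(2025, 3, 3), "first_meeting_on": date(2025, 3, 13)},
    {"mql_on": date(2025, 3, 4), "first_meeting_on": None},
]
print(qualified_reply_rate(replies), median_days_to_first_meeting(leads))  # 0.5 7.0
```

Using the median rather than the mean keeps one stalled enterprise deal from masking a faster typical cycle.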

Risks and guardrails

| Risk | Reality check | Mitigation |
|---|---|---|
| Noisy intent | Not all intent data reflects real buying motion | Use human review gates and pair intent with real engagement |
| Model drift | Scoring accuracy can fade quarter to quarter as markets shift | Retrain at least every 90 days and monitor score quality |
| Over-automation fatigue | Buyers can tell when sequences are robotic | Mandate human edits on tone, structure, and key moments |
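Parts of these mitigations are easy to automate as checks. The sketch below routes intent-only signals to human review, flags a model for retraining once it is 90 days old, and measures score quality as the SQL rate among the top-scored 20% of leads. The function names, the `score` and `is_sql` keys, and the 20% cutoff are assumptions for illustration.

```python
from datetime import date, timedelta

RETRAIN_EVERY = timedelta(days=90)


def intent_action(intent_surge: bool, engaged_recently: bool) -> str:
    """Noisy-intent guardrail: intent alone goes to a human, not straight into a sequence."""
    if intent_surge and engaged_recently:
        return "prioritize"    # intent corroborated by real engagement
    if intent_surge:
        return "human_review"  # intent without engagement may be noise
    return "no_change"


def retrain_due(last_trained_on: date, today: date) -> bool:
    """Model-drift guardrail: retrain at least every 90 days."""
    return today - last_trained_on >= RETRAIN_EVERY


def top_share_sql_rate(scored_leads, top_share: float = 0.2) -> float:
    """Score-quality check: SQL rate among the highest-scored leads.

    Compare this value over time; a sustained drop is one sign the score is drifting.
    """
    if not scored_leads:
        return 0.0
    ranked = sorted(scored_leads, key=lambda lead: lead["score"], reverse=True)
    k = max(1, int(len(ranked) * top_share))
    return sum(lead["is_sql"] for lead in ranked[:k]) / k


print(intent_action(True, False))                       # human_review
print(retrain_due(date(2025, 1, 1), date(2025, 4, 1)))  # True
```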

FAQ

What is a good MQL to SQL conversion rate?

It depends on your industry and channels. Start with your current baseline, then compare it to the benchmarks above. As a directional guide, B2B SaaS often sits near 13 percent and manufacturing near 16 percent. [6]

Does responding within five minutes really matter?

Yes. Odds of contact and qualification fall sharply after five minutes. The specific day or hour matters less than how quickly you follow up in that first window. [1]

Is predictive lead scoring worth it?

How do we measure improvement without vanity metrics?

Focus on qualified reply rate, meetings booked, time to first meeting, and SQL rate by source. Use opens and clicks only as supporting signals.

Will AI replace SDRs?

No. Buyers still want competent human interactions at key points. AI shortens time to relevance, handles research, and drafts options. Humans decide what to send and when to pick up the phone.

References

[1] Oldroyd, J. et al. (2011). The Short Life of Online Sales Leads. Harvard Business Review. Classic study on response time and qualification odds. hbr.org/2011/03/the-short-life-of-online-sales-leads

[2] Gartner (June 25, 2025). Press release: survey data on B2B preference for rep-free buying and avoidance of irrelevant outreach. gartner.com/.../rep-free-buying-experience

[3] Forrester (2024). State of Business Buying 2024 (summary). Average buying group size and committee dynamics. influ2.com/academy/buying-committees

[4] Wu, M. (2024). Amplifying the Power of Predictive Lead Scoring in B2B. PACIS 2024. aisel.aisnet.org/pacis2024/.../3/

[5] González Flores, L. (2025). The relevance of lead prioritization: a B2B lead scoring model. Frontiers in Artificial Intelligence. pmc.ncbi.nlm.nih.gov/articles/PMC11925937/

[6] FirstPageSage (October 3, 2024). MQL to SQL Conversion Rate by Industry: 2025 Report. firstpagesage.com/.../mql-to-sql-conversion-rate-by-industry/

Dec 8, 2025